| author: | zhaoyue-zephyrus |

| score: | 8 / 10 |

This paper attempts to scale up CNNs in a principled way, for better accuracy across a wide range of resource budgets.

It has two main contributions:

- Compound scaling

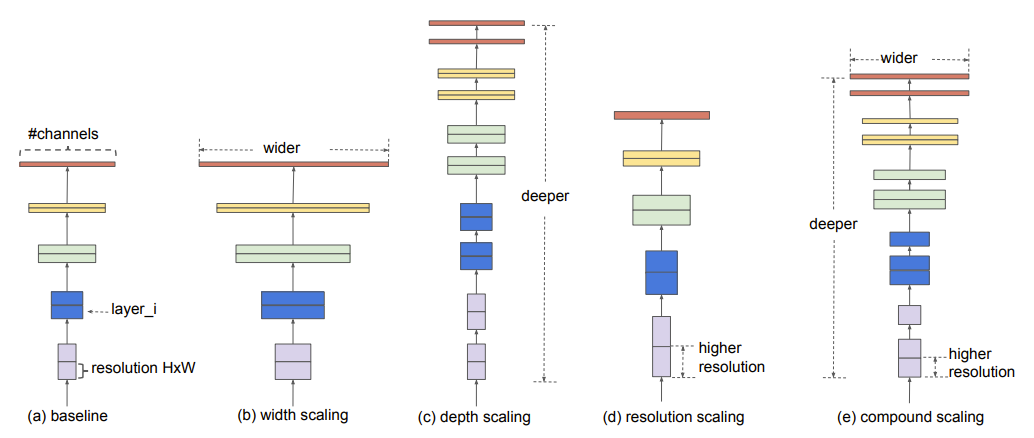

It unifies the common network scaling dimensions (depth, width, and input resolution) into a single factor called the compound coefficient \(\phi\), which scales all three as follows:

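\[
\begin{aligned}
\text{depth}: &\quad d = \alpha^\phi \\
\text{width}: &\quad w = \beta^\phi \\
\text{resolution}: &\quad r = \gamma^\phi,
\end{aligned}
\]

where \(\alpha, \beta, \gamma\) are constants constrained by \(\alpha\cdot\beta^2\cdot\gamma^2 \approx 2\) and \(\alpha \geq 1, \beta \geq 1, \gamma \geq 1\). Because the FLOPs of a convolutional network grow linearly with depth but quadratically with width and resolution, total FLOPs scale by \((\alpha\cdot\beta^2\cdot\gamma^2)^\phi\); the constraint therefore makes FLOPs increase by approximately \(2^\phi\) as the compound coefficient grows.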
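As a quick numeric check of these formulas, the sketch below computes the three multipliers and the resulting FLOPs factor. It assumes FLOPs scale as \(d \cdot w^2 \cdot r^2\); the function names are hypothetical, and \(\alpha = 1.2, \beta = 1.1, \gamma = 1.15\) (the values the paper reports for EfficientNet-B0) are used only as example inputs.

```python
from typing import Tuple


def compound_multipliers(phi: float, alpha: float, beta: float,
                         gamma: float) -> Tuple[float, float, float]:
    """Return the (depth, width, resolution) multipliers for coefficient phi."""
    return alpha ** phi, beta ** phi, gamma ** phi


def flops_multiplier(phi: float, alpha: float, beta: float, gamma: float) -> float:
    """Approximate FLOPs growth, assuming FLOPs ~ depth * width^2 * resolution^2."""
    d, w, r = compound_multipliers(phi, alpha, beta, gamma)
    return d * w ** 2 * r ** 2


if __name__ == "__main__":
    # Example constants (values reported in the paper for EfficientNet-B0).
    print(round(flops_multiplier(1, 1.2, 1.1, 1.15), 2))  # 1.92
```

With these example values, each unit step of \(\phi\) multiplies FLOPs by about 1.92 rather than exactly 2, which is why the constraint is stated as an approximation.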

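Many combinations of \(\alpha, \beta, \gamma\) satisfy the constraint, so the authors fix \(\phi = 1\) and run a small grid search for the combination with the best accuracy, obtaining \(\alpha = 1.2, \beta = 1.1, \gamma = 1.15\) for the EfficientNet-B0 baseline. These constants are then held fixed while \(\phi\) is increased.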
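The search step can be sketched as a brute-force loop over a candidate grid. This is a minimal illustration, not the authors' code: the candidate values, the tolerance on the \(\alpha\cdot\beta^2\cdot\gamma^2 \approx 2\) constraint, and the `evaluate` callback (which in practice would train and validate the \(\phi = 1\) scaled model) are all hypothetical.

```python
import itertools
from typing import Callable, Iterable, Iterator, Optional, Tuple

Triple = Tuple[float, float, float]


def candidate_triples(values: Iterable[float], target: float = 2.0,
                      tol: float = 0.1) -> Iterator[Triple]:
    """Yield (alpha, beta, gamma), each >= 1, with alpha*beta^2*gamma^2 within tol of target."""
    vals = [v for v in values if v >= 1.0]
    for a, b, g in itertools.product(vals, repeat=3):
        if abs(a * b ** 2 * g ** 2 - target) <= tol:
            yield (a, b, g)


def grid_search(values: Iterable[float], evaluate: Callable[[Triple], float],
                target: float = 2.0, tol: float = 0.1) -> Optional[Triple]:
    """Return the admissible triple with the highest evaluate() score, or None if none qualify."""
    best: Optional[Triple] = None
    best_score = float("-inf")
    for triple in candidate_triples(values, target, tol):
        score = evaluate(triple)  # hypothetical: accuracy of the phi=1 model
        if score > best_score:
            best, best_score = triple, score
    return best


if __name__ == "__main__":
    grid = [1.0, 1.05, 1.1, 1.15, 1.2, 1.25]
    # Toy stand-in for validation accuracy; NOT a real measurement.
    toy = lambda t: -abs(t[0] - 1.2) - abs(t[1] - 1.1) - abs(t[2] - 1.15)
    print(grid_search(grid, toy))  # (1.2, 1.1, 1.15)
```

Filtering by the constraint first keeps the number of expensive `evaluate` calls small, which matters because each call would be a full training run.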

- A new base network, EfficientNet-B0

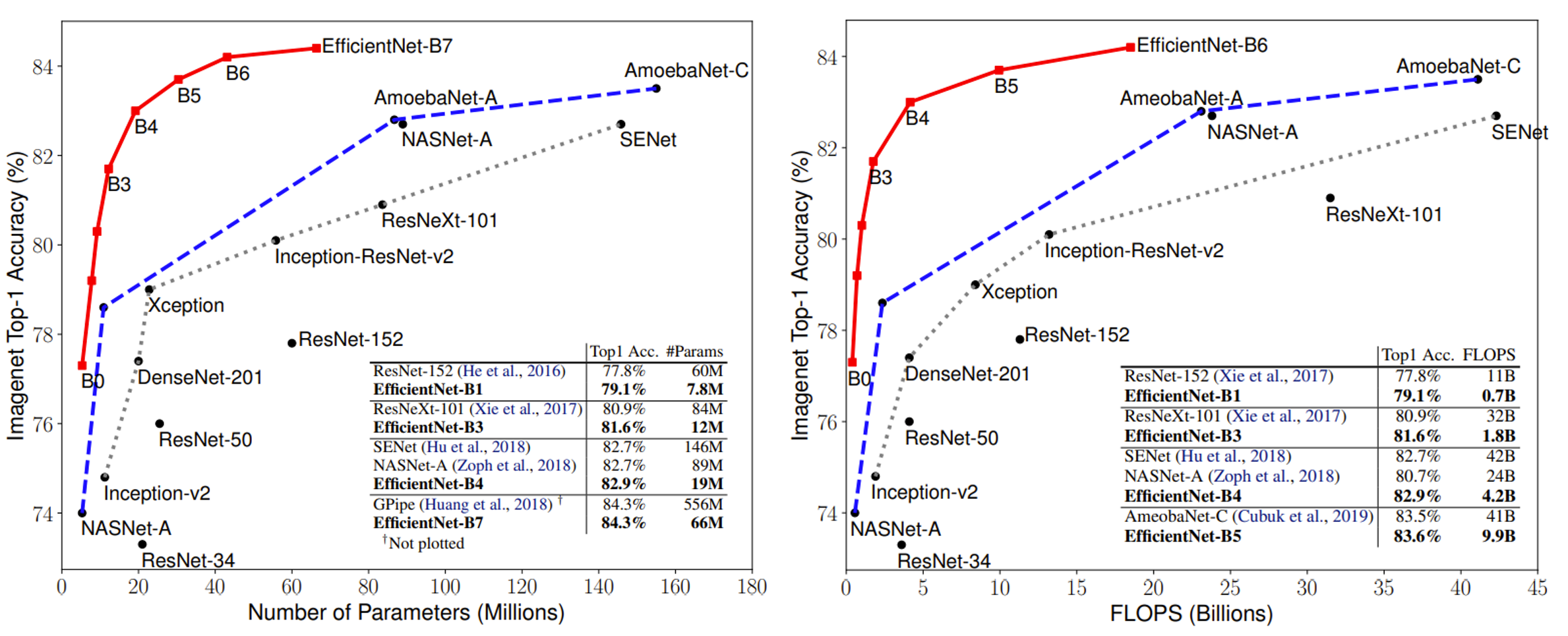

Scaling with the compound coefficient brings noticeable gains over scaling a single dimension, for both MobileNet and ResNet. However, MobileNet and ResNet may not be optimal base architectures to start from. The authors therefore design a new base network with neural architecture search (NAS), using an objective that rewards both high accuracy and low FLOPs. The resulting network, named EfficientNet-B0, is built from blocks similar to those of MobileNetV2 (the mobile inverted bottleneck, MBConv), but its hyper-parameters are found by the search.
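To make "optimizes both accuracy and FLOPs" concrete, the paper scores a candidate model \(m\) as \(\mathrm{ACC}(m) \times [\mathrm{FLOPS}(m)/T]^w\) with \(w = -0.07\), where \(T\) is a target FLOPs budget. The sketch below is a minimal version of that reward; the default \(T = 400\)M is an assumption chosen to sit near EfficientNet-B0's roughly 0.39B FLOPs, and the function name is hypothetical.

```python
def nas_reward(accuracy: float, flops: float,
               target_flops: float = 400e6, w: float = -0.07) -> float:
    """Score a candidate model as ACC(m) * (FLOPS(m) / T) ** w.

    With w < 0, candidates above the FLOPs target T are penalized
    and candidates below it receive a small bonus.
    """
    if flops <= 0 or target_flops <= 0:
        raise ValueError("FLOPs and target FLOPs must be positive")
    return accuracy * (flops / target_flops) ** w


if __name__ == "__main__":
    # A model exactly at the target keeps its raw accuracy as its score.
    print(nas_reward(0.77, 400e6))
    # Doubling FLOPs scales the score by 2 ** -0.07.
    print(nas_reward(0.77, 800e6))
```

Because \(2^{-0.07} \approx 0.95\), a candidate with twice the FLOPs needs roughly a 5% relative accuracy gain just to match the reward of a model at the target, which is how the exponent trades accuracy against cost.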

Keeping \(\alpha, \beta, \gamma\) fixed and scaling EfficientNet-B0 up with increasing \(\phi\) yields the larger members of the EfficientNet family, B1 to B7.
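The step from B0 to larger models can be sketched by applying the multipliers to B0's MBConv stage table (as listed in the paper; stem and head omitted). The default \(\alpha, \beta, \gamma\) are the paper's reported values, but the rounding rules (channels to a multiple of 8, layer counts rounded up) and all names here are assumptions modeled on common MobileNet-style code, so the output is illustrative and may not match the released B1 to B7 configurations exactly.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Stage:
    operator: str  # block type and kernel size, e.g. "MBConv6, k5x5"
    channels: int  # output channels of the stage
    layers: int    # number of repeated blocks in the stage


# EfficientNet-B0 MBConv stages (stem conv and 1x1 head omitted).
B0_STAGES: List[Stage] = [
    Stage("MBConv1, k3x3", 16, 1),
    Stage("MBConv6, k3x3", 24, 2),
    Stage("MBConv6, k5x5", 40, 2),
    Stage("MBConv6, k3x3", 80, 3),
    Stage("MBConv6, k5x5", 112, 3),
    Stage("MBConv6, k5x5", 192, 4),
    Stage("MBConv6, k3x3", 320, 1),
]
B0_RESOLUTION = 224


def round_channels(channels: float, divisor: int = 8) -> int:
    """Round to the nearest multiple of divisor, bumping up if that loses more than 10%."""
    rounded = max(divisor, int(channels + divisor / 2) // divisor * divisor)
    if rounded < 0.9 * channels:
        rounded += divisor
    return rounded


def scale_b0(phi: float, alpha: float = 1.2, beta: float = 1.1,
             gamma: float = 1.15) -> Tuple[List[Stage], int]:
    """Apply compound scaling to the B0 stages; return (stages, input resolution)."""
    d, w, r = alpha ** phi, beta ** phi, gamma ** phi
    stages = [
        Stage(s.operator, round_channels(s.channels * w), math.ceil(s.layers * d))
        for s in B0_STAGES
    ]
    return stages, int(round(B0_RESOLUTION * r))


if __name__ == "__main__":
    stages, resolution = scale_b0(1)
    for s in stages:
        print(s.operator, s.channels, s.layers)
    print("resolution:", resolution)
```

Rounding layer counts up means even a small depth multiplier adds at least one block to every stage, so actual FLOPs can land above the idealized \(2^\phi\) estimate.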

TL;DR

- A compound coefficient to unify existing network scaling factors.

- A base network, EfficientNet-B0, found by NAS, and the EfficientNet-B1 to -B7 family scaled up from it with increasing compound coefficients.

- Significantly better trade-off between accuracy and FLOPs/#params.