| author: | philkr |

| score: | 10 / 10 |

This is the paper that started the deep learning revolution in 2012.

At a core technical level, the architecture is quite similar to LeNet-5, just bigger.

There are a few core differences when scaling up the architecture:

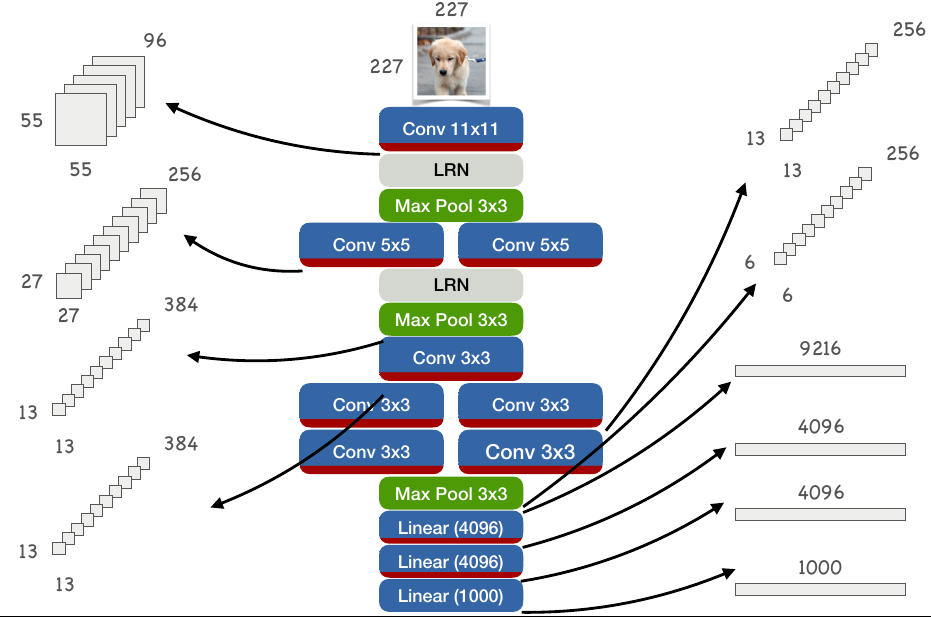

- The network uses 5 conv layers and 3 linear (fully-connected) layers
  - 2 conv and 1 FC more than LeNet-5

- It uses max-pooling
  - Pooling is overlapping: an odd-sized 3×3 kernel with stride 2

- The first convolution uses a larger 11×11 kernel with stride \(4\)
  - This reduces computation and allows for a higher input resolution

- All but the first convolution use padding
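To make the layer list concrete, the sketch below tracks the spatial size and parameter count through an AlexNet-style stack using out = floor((in + 2p - k) / s) + 1. It rests on assumptions the review does not state: a 227×227 RGB input (a commonly used value that makes the arithmetic come out even), the widely quoted filter counts 96-256-384-384-256, and a single ungrouped network instead of the original two-GPU split. The helper names are illustrative.

```python
# Spatial-size and parameter bookkeeping for an AlexNet-style layer stack.
# Assumptions (not from the review): 227x227x3 input, one ungrouped network
# (the original split some layers across two GPUs), filters 96-256-384-384-256.

def out_size(size, kernel, stride=1, pad=0):
    """Output width of a conv/pool layer: floor((size + 2*pad - kernel) / stride) + 1."""
    return (size + 2 * pad - kernel) // stride + 1

# (name, kind, out_channels, kernel, stride, pad)
LAYERS = [
    ("conv1", "conv", 96, 11, 4, 0),   # large kernel, stride 4, no padding
    ("pool1", "pool", None, 3, 2, 0),  # overlapping 3x3 max-pool, stride 2
    ("conv2", "conv", 256, 5, 1, 2),
    ("pool2", "pool", None, 3, 2, 0),
    ("conv3", "conv", 384, 3, 1, 1),
    ("conv4", "conv", 384, 3, 1, 1),
    ("conv5", "conv", 256, 3, 1, 1),
    ("pool5", "pool", None, 3, 2, 0),
]
FC_SIZES = [4096, 4096, 1000]  # the three fully-connected layers (fc6, fc7, fc8)

def summarize(size=227, channels=3):
    """Return per-layer output shapes and the total number of weights + biases."""
    shapes, params = [], 0
    for name, kind, out_ch, k, s, p in LAYERS:
        size = out_size(size, k, s, p)
        if kind == "conv":
            params += k * k * channels * out_ch + out_ch  # weights + biases
            channels = out_ch
        shapes.append((name, size, size, channels))
    features = size * size * channels  # flattened input to fc6
    for i, units in enumerate(FC_SIZES, start=6):
        params += features * units + units
        shapes.append((f"fc{i}", units))
        features = units
    return shapes, params

if __name__ == "__main__":
    shapes, params = summarize()
    for row in shapes:
        print(row)
    print("total parameters:", params)
```

Under these assumptions it reports a 6×6×256 feature map before the FC layers and 62,378,344 parameters, about 60% of them in fc6 alone. For comparison, `out_size(227, 11, 1)` gives 217 instead of 55, so a stride-1 first layer would produce roughly 16× more output positions; that is the computational saving the stride-4 bullet refers to.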

On its own, the AlexNet architecture likely would not have trained; it required a fairly large bag of tricks, accumulated over the preceding 12 years (2000 to 2012), to work:

- ReLU activations
  - They do not saturate for positive inputs and pass the gradient back through the network

- Local response normalization (LRN) after the first two conv layers
  - This helped gradient back-propagation to the initial layers
  - Later papers showed that a good initialization makes this normalization obsolete

- GPU training: this was one of the first papers to train on GPUs
  - The architecture is split down the middle, and each half resides on a different GPU

- Data augmentation: random crops, horizontal flips, and color augmentations

- Dropout on the first two FC layers
  - Reduces overfitting
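Several of these tricks are short enough to state directly in code. The plain-Python sketch below works on scalars and lists of channel values rather than tensors: it contrasts the ReLU and sigmoid gradients, implements cross-channel LRN with the hyperparameters reported in the paper (k = 2, n = 5, α = 1e-4, β = 0.75), and applies dropout. The function names are illustrative, and the dropout is the common "inverted" variant, which is a swap: the paper instead left training outputs unscaled and multiplied by 0.5 at test time.

```python
import math
import random

def sigmoid_grad(x):
    """Derivative of the logistic sigmoid: at most 0.25 and vanishing for large |x|."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def relu_grad(x):
    """Derivative of ReLU: exactly 1 for positive inputs, so it does not saturate there."""
    return 1.0 if x > 0 else 0.0

def local_response_norm(activations, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Cross-channel LRN at a single spatial position.

    activations: channel values a_0..a_{N-1} at one (x, y) location.
    Each a_i is divided by (k + alpha * sum of a_j^2 over the n channels
    centred on i, clipped at the edges) ** beta.
    """
    N = len(activations)
    out = []
    for i, a in enumerate(activations):
        lo, hi = max(0, i - n // 2), min(N - 1, i + n // 2)
        sq_sum = sum(activations[j] ** 2 for j in range(lo, hi + 1))
        out.append(a / (k + alpha * sq_sum) ** beta)
    return out

def inverted_dropout(values, p=0.5, rng=random):
    """Training-time dropout: zero each unit with probability p, rescale survivors by 1/(1-p).

    This 'inverted' form needs no change at test time; it matches the paper's
    test-time scaling by (1 - p) in expectation.
    """
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]

if __name__ == "__main__":
    for x in (-4.0, 0.5, 4.0):
        print(x, relu_grad(x), round(sigmoid_grad(x), 4))
    print(local_response_norm([1.0, 2.0, 3.0, 4.0, 5.0]))
    print(inverted_dropout([1.0] * 8, rng=random.Random(0)))
```

The gradient comparison shows why ReLU helps depth: each sigmoid layer multiplies the backward signal by at most 0.25, while an active ReLU passes it through unchanged.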

Most of the architectural changes and training tricks were already well known in the deep learning literature by 2012. Where the paper really shines is its performance and experiments. It showed for the first time that deep networks can easily outperform classical methods built on hand-designed features in computer vision tasks. On ImageNet (ILSVRC-2012), it beat the best competing entry by more than 10 percentage points in top-5 error (15.3% vs. 26.2%). Within a year, almost all of computer vision had switched to AlexNet-like architectures.
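The ImageNet comparison is reported as top-5 error: a prediction counts as correct if the true class is among the model's five highest-scoring classes. A minimal sketch of the metric, where the function name and the list-of-lists input layout are illustrative assumptions:

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is NOT among the k highest-scoring classes.

    scores: one list of class scores per example.
    labels: the integer ground-truth class index for each example.
    """
    misses = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores for this example.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)

if __name__ == "__main__":
    scores = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]]
    labels = [2, 2]
    print(top_k_error(scores, labels, k=1))  # → 1.0 (neither top-1 guess is class 2)
    print(top_k_error(scores, labels, k=2))  # → 0.5 (only the first example has class 2 in its top 2)
```

Top-5 error is more forgiving than top-1 error, which matters on ImageNet because many images contain several plausible objects or fine-grained classes that are easy to confuse.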

TL;DR

- AlexNet: Larger LeNet-5 architecture

- Bag of many network training tricks

- Stellar performance on ImageNet