Max Pooling

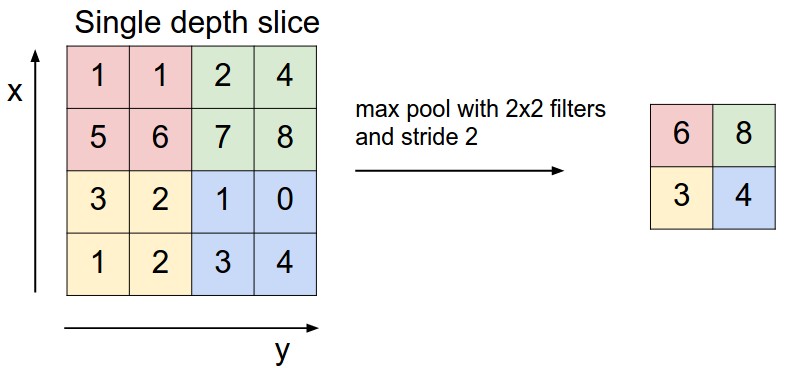

A max pooling layer is a “convolutional”-like operation that takes as input a tensor of size \((N, C, H, W)\) and outputs a tensor of size \((N, C, H_{out}, W_{out})\), where \(N\) is the batch size, \(C\) the number of channels, and \(H, W\) the height and width of the input. Like average pooling, max pooling is used to progressively reduce the spatial size of representations. Unlike average pooling, however, max pooling returns the maximum activation within each sliding window of size \(K\), computed independently for each channel. Refer to “convolutional”-like operations for the definition of \(K\).

credit: cs231n.github.io/convolutional-networks
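The sliding-window maximum described above can be illustrated with a minimal pure-Python sketch for a single channel. The helper name `max_pool2d`, its arguments, and the sample values are hypothetical and chosen only for illustration; the sketch assumes a square window of size `k`, no padding, and that windows not fitting entirely inside the input are dropped.

```python
def max_pool2d(x, k, stride):
    """Max-pool one channel (a list of rows) with a k x k window, no padding."""
    h, w = len(x), len(x[0])
    # Number of window positions that fit along each axis.
    h_out = (h - k) // stride + 1
    w_out = (w - k) // stride + 1
    return [
        [
            # Maximum over the k x k window whose top-left corner is (i*stride, j*stride).
            max(x[i * stride + di][j * stride + dj]
                for di in range(k) for dj in range(k))
            for j in range(w_out)
        ]
        for i in range(h_out)
    ]


# One 4 x 4 channel (illustrative values).
channel = [
    [1, 1, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]

pooled = max_pool2d(channel, k=2, stride=2)
print(pooled)  # [[6, 8], [3, 4]]
```

With a 2 x 2 window and stride 2, the windows do not overlap, so each output value summarizes one quadrant of the input and the spatial size is halved along both axes.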

PyTorch

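>>> import torch

>>> import torch.nn as nn

>>> # square window of size 3, stride 2

>>> m = nn.MaxPool2d(3, stride=2)

>>> # non-square window (3, 2) with stride (2, 1); this replaces the m defined above

>>> m = nn.MaxPool2d((3, 2), stride=(2, 1))

>>> input = torch.randn(20, 16, 50, 32)

>>> output = m(input)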
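As a rough check on the example above, the output size can be worked out by hand. The sketch below follows the output-size formula given in the PyTorch `MaxPool2d` documentation, assuming the default `ceil_mode=False`; the helper name `pool_output_size` is hypothetical and not part of PyTorch.

```python
def pool_output_size(size, kernel, stride, padding=0, dilation=1):
    """Output length along one spatial axis, rounding down (ceil_mode=False)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# Input (20, 16, 50, 32) pooled with window (3, 2) and stride (2, 1).
h_out = pool_output_size(50, kernel=3, stride=2)
w_out = pool_output_size(32, kernel=2, stride=1)
print((20, 16, h_out, w_out))  # (20, 16, 24, 31)
```

The batch and channel dimensions are unchanged because pooling acts only on the two spatial axes.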